It’s been a while since you started working with Python microservices; they’re great, but not perfect. You now have hundreds of microservices, and managing communication between them is becoming a full-time job.

If you were to draw a diagram of the information flow today, it would be obsolete by next month.

Let’s dive into a new subject that will help you boost your Python microservices skills with the mightiness of AWS.

First, a short recap will help you remember the AWS topics we’ve tackled so far:

- In my first article, I focused on how to start your Java project quickly on AWS.

- My second article was about improving your PHP microservices with AWS.

When there’s an issue in one of your environments, finding the root cause is no easy task.

Sometimes, your catalog service is failing because your price service is failing. The price service is failing because your ERP service is down. (I’m not saying your ERP system is a microservice, but a connector to ERP could be).

The whole point of separating your monolith into microservices was to enable your teams to increase velocity by using whatever tools and languages were best suited for the job. Scaling components independently is a close second on the list of reasons.

That’s life: a new tool might solve 80% of your old problems, but will create 20% new ones.

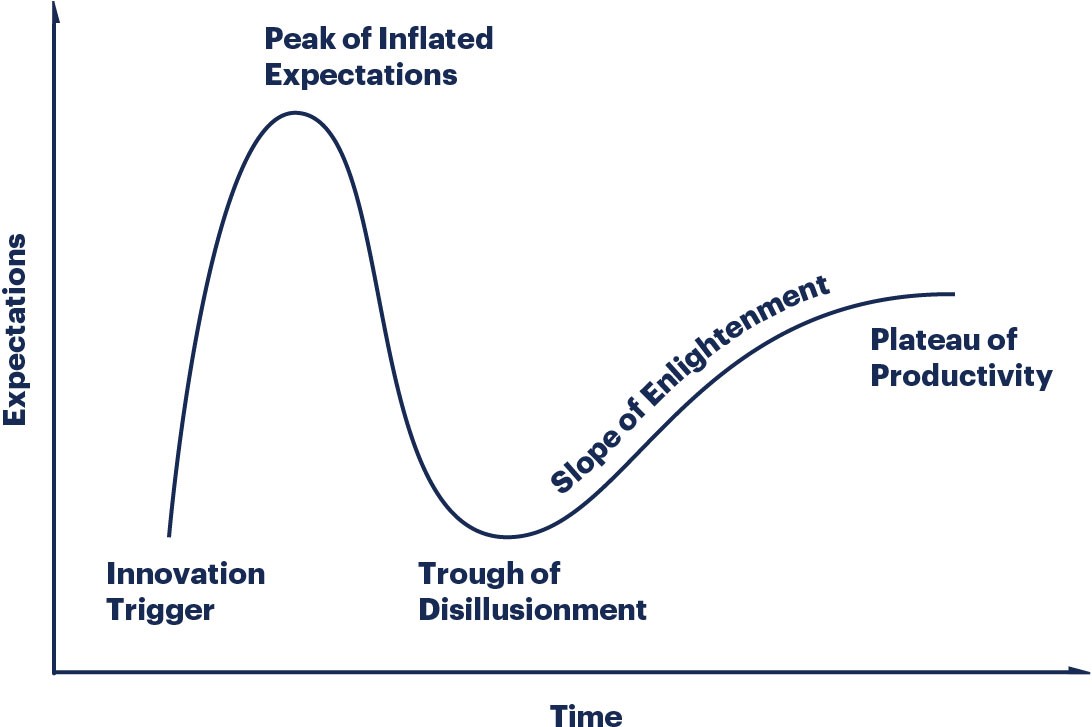

This is the Gartner hype cycle. It’s a good idea to look at it before you start playing with a new shiny thing – it will mentally prepare you for the learning process ahead.

Today, I’m mentioning it because I want to share what Gartner said in their “Hype Cycle for Application Architecture and Development, 2019”, published in August 2019.

According to them, microservices are “Sliding into the Trough” (the valley in the picture above). I’d like to think that you and I are already climbing the slope, but I guess time will tell.

How can we handle these “wonderful” new issues added by our Python microservices?

Observability

It’s a new buzzword, or an old one, depending on how often you go to conferences. Google the term – as I did when I first heard it – and you’ll find academic definitions from “Control Theory.”

What observability really means (in my opinion) is that we’ve used tools such as:

- application and infrastructure metrics

- application and infrastructure logging

- distributed tracing

All centralized in a place where we can easily search and view them to understand what’s happening in our system (no releases with new debug call necessary).

Don’t close the tab!

I’m not saying you should change all of your Python microservices to achieve this. In fact, this is pretty easy to do if you’re in AWS.

Let’s start from the beginning: if you’re using microservices, chances are you’re also using docker. The flavor of the tool is usually less important since what I’m going to suggest works with Kubernetes (Elastic Kubernetes Service, if you’re managing the cluster yourself, please reconsider that practice) and ECS (Elastic Container Service) which is their native offering.

Now, you should turn on “Container Insights”, which is your ready-made dashboard that will give all sorts of information about your microservices (CPU, memory, disk, and network).

You can view this information on three levels:

- Clusters

- Services

- Tasks

Please make sure that you output your container logs properly so that CloudWatch can aggregate them for you to search.

Searching through logs is now much easier with “CloudWatch Log Insights”, a service that gives you the ability to search your logs in an “SQL” manner, replacing the need to pay for an Elastic Search cluster.

There’s a lot more to do in this department, but this is a good start!

Service mesh

You should consider using a service mesh for your communication layer. Instead of changing each microservice to add features that give you more visibility over the flow of data (if you use multiple programming languages, this is not easy), you add a service mesh on top.

A service mesh (in our case AppMesh) behaves as a proxy between all of your Python microservices managing all of the traffic. This is great since now your microservices don’t have to change.

A service mesh is not for everyone. I would consider the following uses:

- A large number of microservices

- Services in more than one compute platform (EC2, Elastic Kubernetes Services, ECS)

- A/B testing

- Canary deploys

- Traffic control needs

Interested in services meshes and how to improve your microservices? Watch the replay of our webinar, where we show you a full example of using AppMesh together with Python Microservices.

Stay tuned, more is coming! Subscribe to the next webinar where I’ll present “Serverless Web Applications with Node.js and AWS.”

Read more:

Amazon Web Services: Deliver High-Quality Solutions Fast